Widget Details: QA Benchmark

The QA Benchmark widget enables you to compare QA score averages for agents, inContact Groups, and forms against a benchmark entity you have selected.

For more information, see inContact WFO Widgets Overview.

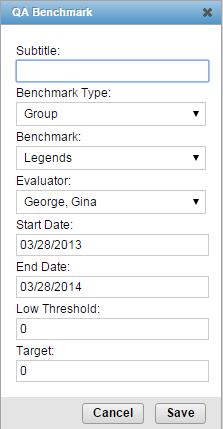

Widget Settings

- Subtitle

- Allows you to create a title for this instance of the widget. The title can be no more than 100 characters, including spaces and punctuation.

- Benchmark Type

- Allows you to select the type of benchmark entity for this instance of the widget. The field offers the values Agent, Group, or Form from a drop-down list, with Agent as the default. The Group value refers to inContact Groups.

- Benchmark

- Allows you to select the specific benchmark for this instance of the widget. The field offers values in a drop-down list based on your Benchmark Type selection, and limited by your access permissions. For example, if you choose a Benchmark Type of Group, the Benchmark list will display inContact Groups to which you have access.

- Evaluator

- Allows you to filter the widget display by evaluator. The field offers a drop-down list of evaluators in your system. The default value is Any.

- Start Date

- Allows you to filter the widget display based on a date range. The field offers a date selector you can use to choose a starting date for the range.

- End Date

- Allows you to filter the widget display based on a date range. The field offers a date selector you can use to choose an ending date for the range.

- Low Threshold

- Allows you to configure the lowest acceptable score for benchmarking purposes. The field accepts positive numeric values.

- Target

- Allows you to configure the optimal minimum score for benchmarking purposes. The field accepts positive numeric values.

Filter settings stay in effect after you log out of inContact WFO or move to another page. The combination of the Low Threshold and Target values determines the look of the display.
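The setting constraints listed above can be summarized as a validation sketch. It is illustrative only: the `QABenchmarkSettings` class and its field names are hypothetical and not part of inContact WFO, `None` stands in for the Evaluator value Any, and the check that End Date is not before Start Date is an added assumption that the widget may or may not enforce.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

# Hypothetical model of the widget settings; names are illustrative only.
BENCHMARK_TYPES = ("Agent", "Group", "Form")
MAX_SUBTITLE_LENGTH = 100  # characters, including spaces and punctuation


@dataclass
class QABenchmarkSettings:
    subtitle: str
    benchmark: str
    start_date: date
    end_date: date
    low_threshold: float
    target: float
    benchmark_type: str = "Agent"    # Agent is the default Benchmark Type
    evaluator: Optional[str] = None  # None stands in for "Any"

    def validate(self) -> list[str]:
        """Return a list of problems; an empty list means the settings pass."""
        problems = []
        if len(self.subtitle) > MAX_SUBTITLE_LENGTH:
            problems.append("Subtitle exceeds 100 characters")
        if self.benchmark_type not in BENCHMARK_TYPES:
            problems.append("Benchmark Type must be Agent, Group, or Form")
        if self.low_threshold <= 0:
            problems.append("Low Threshold must be a positive number")
        if self.target <= 0:
            problems.append("Target must be a positive number")
        # Assumption: a date range should not end before it starts.
        if self.end_date < self.start_date:
            problems.append("End Date is before Start Date")
        return problems
```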

Widget Display

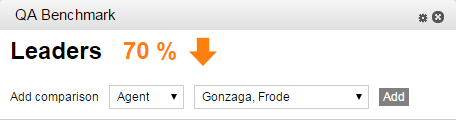

The display shows the performance of the benchmark entity at a glance. The colors in the display show where the benchmark's average QA score falls relative to these user-defined thresholds:

- Red: below the lowest acceptable score (Low Threshold)

- Yellow: between the Low Threshold and the optimal minimum score (Target)

- Green: above the Target
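The color rules can be expressed as a small function. This is a sketch only: the name `benchmark_color` is hypothetical, and because the rules do not say which color applies when a score equals a threshold exactly, the boundary handling here (equal to Low Threshold is yellow, equal to Target is green) is an assumption.

```python
def benchmark_color(average: float, low_threshold: float, target: float) -> str:
    """Map an average QA score to a display color.

    Assumption: a score equal to the Low Threshold counts as yellow, and a
    score equal to the Target counts as green; the widget may differ.
    """
    if average < low_threshold:
        return "red"     # below the Low Threshold
    if average < target:
        return "yellow"  # between the Low Threshold and the Target
    return "green"       # at or above the Target
```

For example, with a Low Threshold of 70 and a Target of 85, an average of 78 would fall in the yellow band.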

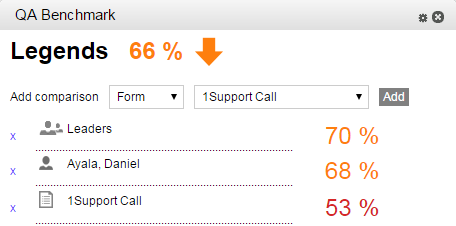

For example, in the image shown here, the benchmark entity is the Legends group. The group's average QA score is below the Target but above the Low Threshold.

These rules determine the average QA score (all data is limited by the agents, groups, and forms to which you have access):

- If Agent is the benchmark, then the average is for all completed QA evaluations for the agent, regardless of form, for the specified time period. If an evaluator was specified, only evaluations done by that evaluator are included in the calculation.

- If Group is the benchmark, then the average is for all completed QA evaluations for all forms and agents in the group for the specified time period. If an evaluator was specified, only evaluations done by that evaluator are included in the calculation.

- If Form is the benchmark, then the average is for all completed QA evaluations for that form for all agents for the specified time period. If an evaluator was specified, only evaluations done by that evaluator are included in the calculation.
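The averaging rules above can be sketched as a filter followed by a mean. The `Evaluation` record and `benchmark_average` function are hypothetical, not an inContact WFO data model or API. The sketch assumes that the input has already been limited to the agents, groups, and forms you can access, that each evaluation carries a single group, and that both ends of the date range are inclusive.

```python
from dataclasses import dataclass
from datetime import date
from statistics import mean
from typing import Iterable, Optional


@dataclass(frozen=True)
class Evaluation:
    # Hypothetical record shape, for illustration only.
    agent: str
    group: str
    form: str
    evaluator: str
    completed_on: date
    score: float
    completed: bool = True


def benchmark_average(
    evaluations: Iterable[Evaluation],
    benchmark_type: str,
    benchmark: str,
    start: date,
    end: date,
    evaluator: Optional[str] = None,  # None plays the role of "Any"
) -> Optional[float]:
    """Average score of completed evaluations that match the benchmark."""
    # Pick the attribute to match based on the Benchmark Type.
    field = {"Agent": "agent", "Group": "group", "Form": "form"}[benchmark_type]
    scores = [
        e.score
        for e in evaluations
        if e.completed
        and getattr(e, field) == benchmark
        and start <= e.completed_on <= end  # assumed inclusive range
        and (evaluator is None or e.evaluator == evaluator)
    ]
    # No matching evaluations means there is no average to display.
    return mean(scores) if scores else None
```

Comparison entities added to the widget would presumably be averaged under the same rules for their own type, which is what makes them comparable to the benchmark.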

You can add an unlimited number of comparison entities to the widget using the Add Comparison lists, and remove any of them by clicking the x on that entity's line.

In the preceding image, the average QA scores over the defined date range are shown for another group (Leaders), for a single agent (Daniel Ayala), and for a specific evaluation form (1Support Call). You can thus compare these scores to the benchmark entity, the Legends group.